Benchmark of Python JSON libraries

A couple of weeks ago, after spending some time with the Python profiler, I discovered that Python’s json module is not as fast as I expected, so I decided to benchmark alternative JSON libraries.

Libraries

- simplejson 3.8.2
- ujson 1.35
- python-rapidjson 0.0.6

python-cjson, yajl-py and jsonlib are not included in the benchmark because they are not in active development and don’t support Python 3.

simplejson and ujson can be used as drop-in replacements for the standard json module, but ujson doesn’t support advanced features such as hooks and custom encoders and decoders.
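To make the difference concrete, here is a minimal sketch of the hooks the standard json module offers: `default` for encoding objects JSON can't represent natively, and `object_hook` for post-processing every decoded object. The `"__datetime__"` marker key and the two helper functions are hypothetical conventions for this example; code that depends on hooks like these would not port to ujson just by changing the import.

```python
import json
from datetime import datetime


def encode_default(obj):
    # Called by json.dumps for any object it cannot serialize natively.
    if isinstance(obj, datetime):
        return {"__datetime__": obj.isoformat()}  # hypothetical marker key
    raise TypeError("%s is not JSON serializable" % type(obj).__name__)


def decode_hook(d):
    # Called by json.loads for every decoded JSON object (innermost first).
    if "__datetime__" in d:
        return datetime.fromisoformat(d["__datetime__"])
    return d


record = {"user": "alice", "created": datetime(2016, 8, 1, 12, 30)}
text = json.dumps(record, default=encode_default, sort_keys=True)
restored = json.loads(text, object_hook=decode_hook)
```

Here `text` holds the datetime as a tagged string, and `restored` compares equal to `record` again.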

You can change your imports this way to use an alternative library:

```python
import ujson as json  # existing json.loads()/json.dumps() calls now go through ujson
```

Interpreters

- Python (CPython) 2.7.12
- Python (CPython) 3.5.2
- PyPy 5.3.0

Methodology

The tests were performed on a MacBook Pro (Late 2013; 2.6 GHz Intel Core i5, 8 GB 1600 MHz DDR3, OS X 10.11.6). Each test was run 100 times.
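As an independent illustration of this methodology, here is a minimal sketch that times `loads` and `dumps` with `timeit`, 100 calls each. It is not the author's benchmark code: the `make_sample` payload is a hypothetical stand-in for the test files, and any library that isn't installed (python-rapidjson imports as `rapidjson`) is simply skipped.

```python
import importlib
import json
import timeit

RUNS = 100  # each test is run 100 times, as in the methodology above


def load_libraries(names=("json", "simplejson", "ujson", "rapidjson")):
    # Import whichever JSON libraries are installed; skip the rest.
    libs = {}
    for name in names:
        try:
            libs[name] = importlib.import_module(name)
        except ImportError:
            pass
    return libs


def make_sample():
    # Hypothetical payload standing in for a large JSON file such as twitter.json.
    return {"statuses": [{"id": i, "text": "tweet %d" % i, "tags": ["a", "b"]}
                         for i in range(1000)]}


def bench(libs, obj, runs=RUNS):
    # Return {library: (loads_seconds, dumps_seconds)}, each the total for `runs` calls.
    results = {}
    for name, lib in libs.items():
        text = lib.dumps(obj)
        loads_t = timeit.timeit(lambda: lib.loads(text), number=runs)
        dumps_t = timeit.timeit(lambda: lib.dumps(obj), number=runs)
        results[name] = (loads_t, dumps_t)
    return results


if __name__ == "__main__":
    for name, (l, d) in sorted(bench(load_libraries(), make_sample()).items()):
        print("%-12s loads %.3f s  dumps %.3f s" % (name, l, d))
```

Passing each library's own `loads`/`dumps` through the same harness keeps the comparison fair: every library parses the same text and encodes the same object.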

| File name | File size | Description |
|---|---|---|
| twitter.json | 632 KB | Single large JSON (source) |
| one-json-per-line.jsons.txt | 176 KB | Collection of 1000 JSON objects (source) |
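The second file holds one JSON document per line, so one natural way to benchmark small objects is to decode and encode each line separately rather than the whole file at once. A minimal sketch of that pattern, assuming UTF-8 text and skipping blank lines; `loads_lines` and `dumps_lines` are hypothetical helper names:

```python
import io
import json


def loads_lines(stream, loads=json.loads):
    # Decode one JSON document per non-empty line.
    return [loads(line) for line in stream if line.strip()]


def dumps_lines(objs, dumps=json.dumps):
    # Encode each object on its own line; json.dumps emits no raw newlines by default.
    return "".join(dumps(obj) + "\n" for obj in objs)


objs = [{"id": i, "ok": i % 2 == 0} for i in range(1000)]
text = dumps_lines(objs)
parsed = loads_lines(io.StringIO(text))
```

The `loads`/`dumps` parameters make it easy to swap in another library's functions, for example `ujson.loads`, without changing the loop.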

I published the source code of the benchmark on GitHub. You can clone it and rerun it to verify the results yourself or to test a new release of an alternative JSON library.

Results

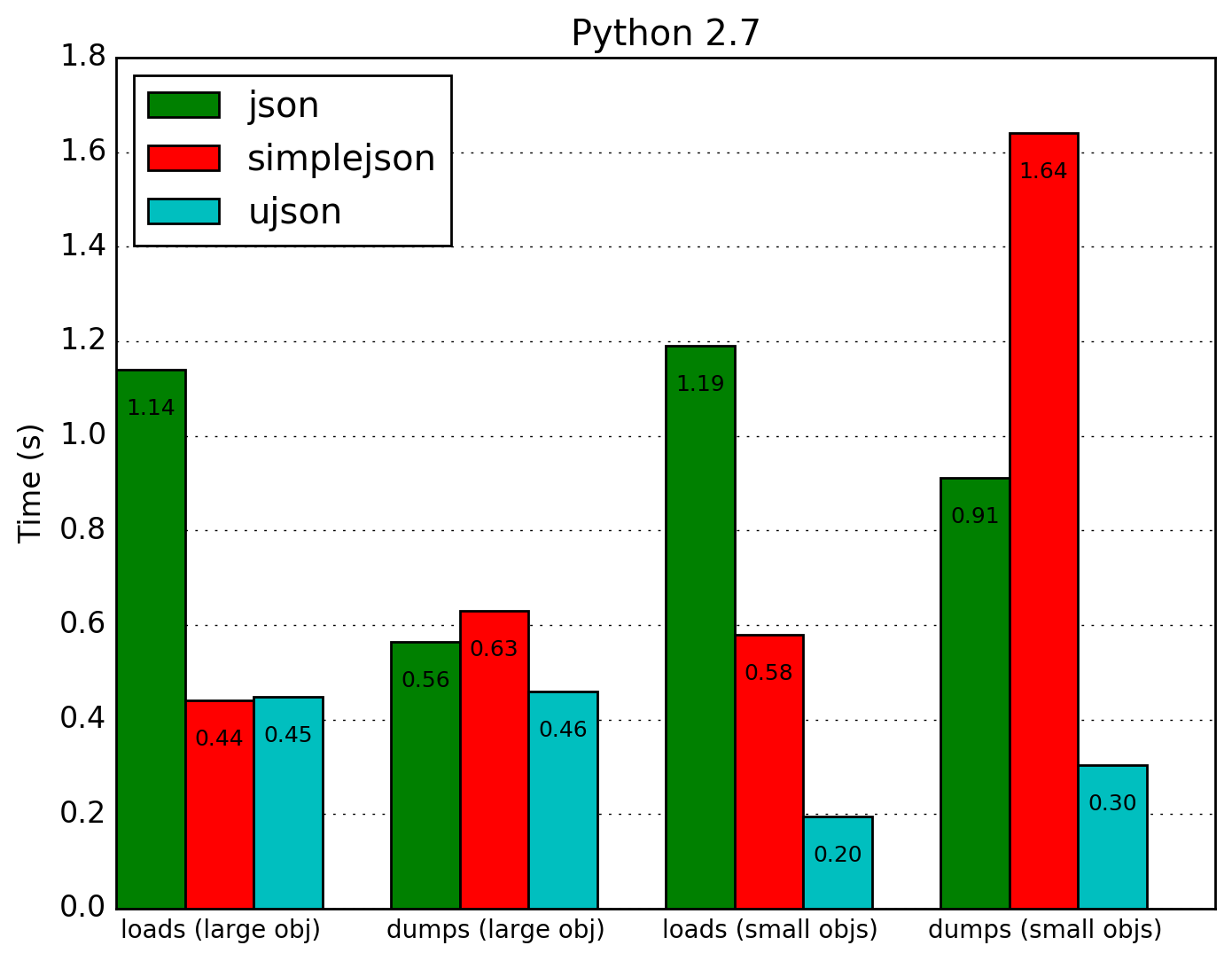

| Test | json | simplejson | ujson |
|---|---|---|---|
| loads (large obj) | 1.140 | 0.441 | 0.448 |
| dumps (large obj) | 0.564 | 0.630 | 0.459 |
| loads (small objs) | 1.190 | 0.579 | 0.195 |
| dumps (small objs) | 0.910 | 1.641 | 0.304 |

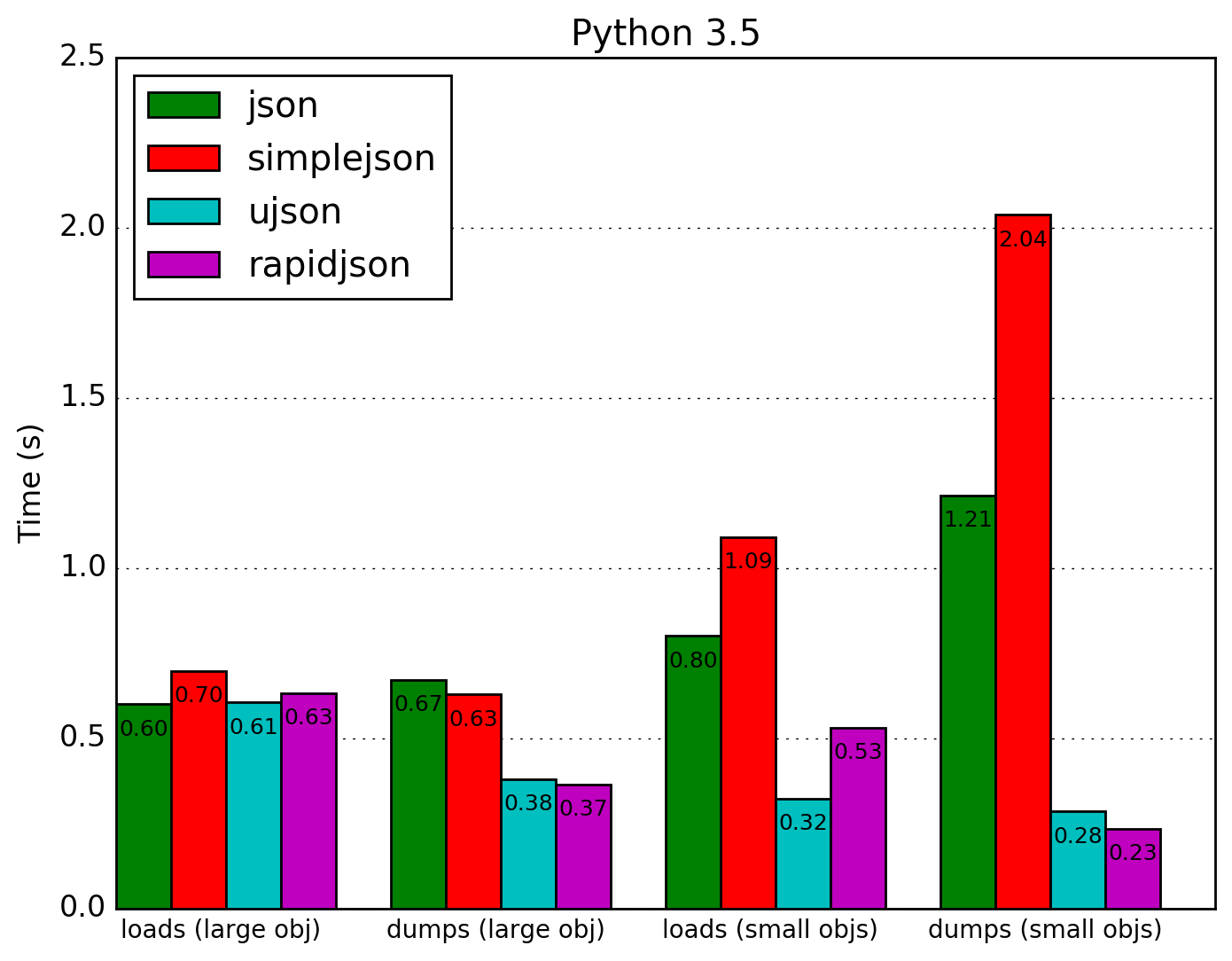

| Test | json | simplejson | ujson | rapidjson |
|---|---|---|---|---|
| loads (large obj) | 0.600 | 0.698 | 0.605 | 0.634 |
| dumps (large obj) | 0.673 | 0.629 | 0.381 | 0.365 |
| loads (small objs) | 0.801 | 1.091 | 0.322 | 0.531 |
| dumps (small objs) | 1.213 | 2.038 | 0.285 | 0.234 |

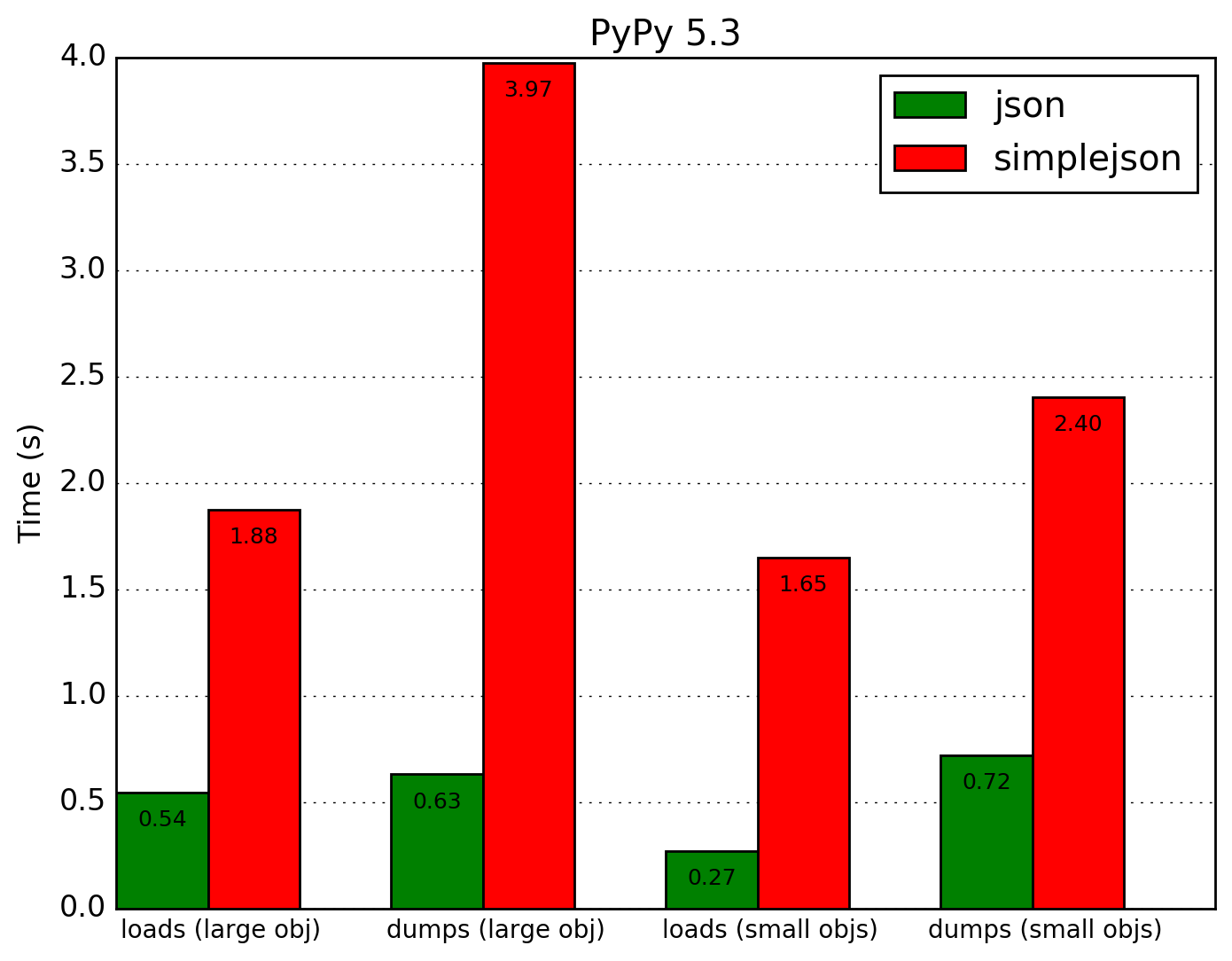

| Test | json | simplejson |
|---|---|---|
| loads (large obj) | 0.545 | 1.876 |
| dumps (large obj) | 0.632 | 3.974 |
| loads (small objs) | 0.271 | 1.651 |
| dumps (small objs) | 0.719 | 2.404 |

Conclusion

In these results, ujson is the fastest or among the fastest libraries in every test it appears in. If your application handles a large amount of JSON data and doesn't use any of the advanced features of the built-in json module, you should probably consider switching to ujson.
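To put a figure on "faster", here is a small sketch that computes how many times quicker ujson is than the standard json module, using the json and ujson columns of the first results table above. It assumes the table values are times, so lower is better and a ratio above 1.0 means ujson won.

```python
# Values copied from the first results table: (json, ujson) per test.
FIRST_TABLE = {
    "loads (large obj)": (1.140, 0.448),
    "dumps (large obj)": (0.564, 0.459),
    "loads (small objs)": (1.190, 0.195),
    "dumps (small objs)": (0.910, 0.304),
}


def speedups(table):
    # Ratio json_time / ujson_time, rounded to two decimals.
    return {test: round(json_t / ujson_t, 2)
            for test, (json_t, ujson_t) in table.items()}


for test, ratio in speedups(FIRST_TABLE).items():
    print("%-20s %.2fx" % (test, ratio))
```

By this measure ujson ranges from about 1.2x (dumps, large object) to about 6.1x (loads, small objects) faster than json in that table; the same function works for the other tables by copying in their columns.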